Since the release of ChatGPT in 2022, businesses have been increasingly interested in generative AI (GenAI) and its implications for their operations. Opinions are sharply divided: some dismiss it as just another fad, while others worry it could eliminate jobs. This buzz has led many organizations to rapidly adopt AI tools into their operations to see whether they deliver benefits.

As with many new tools, teams and departments may adopt these technologies without following standard IT procurement processes, letting business needs drive adoption. It is no longer a question of whether companies will adopt GenAI but how to use it securely and ethically as part of the business.

What is Generative AI?

Generative AI, a subset of artificial intelligence, is tasked with a unique mission: to create. Unlike traditional AI, which primarily sifts through existing data to make predictions or analyze patterns, generative AI produces new content, moving into the realm of creativity and imagination.

At its core, generative AI uses advanced algorithms and models such as large language models to create new data, content, or artifacts with minimal human intervention. This technology opens up possibilities ranging from generating lifelike images and videos to crafting eloquent prose and music compositions.

How Businesses Use GenAI

Across various sectors, GenAI is being harnessed to drive innovation, streamline processes, and unlock new opportunities for growth and competitiveness.

One of GenAI’s primary applications is content creation and personalization. By automating content creation processes and generating personalized recommendations, businesses can deliver tailored marketing materials and offers to their customers, enhancing engagement and satisfaction. Additionally, GenAI enables organizations to accelerate innovation cycles by generating design ideas, prototypes, and customized products and services that meet the unique needs of their target audience.

Data augmentation and synthesis represent another critical area where GenAI is making significant strides. By generating synthetic training data for machine learning models, businesses can overcome data scarcity or cost issues, improving the performance and robustness of their AI systems. This, in turn, empowers organizations to make more accurate predictions and derive better insights, driving better decision-making and outcomes.

For customer interaction and support, GenAI-powered chatbots and virtual assistants revolutionize how businesses engage with their customers. These AI-driven agents automate customer support and service interactions, providing personalized assistance and recommendations, enhancing the overall customer experience, and driving loyalty and retention.

GenAI is also transforming creative industries and expression, enabling artists, musicians, and writers to explore new creative possibilities and push the boundaries of creativity and expression. GenAI is reshaping how we create and consume content in the digital age, from art generation and music composition to literature and storytelling.

In areas such as simulation and forecasting, GenAI enables organizations to simulate complex systems and scenarios, forecast outcomes, and optimize processes. By leveraging GenAI-powered simulations, businesses can make data-driven decisions in finance, supply chain management, and risk management, mitigating risks and seizing opportunities in an increasingly volatile and uncertain business environment.

GenAI also plays a critical role in enhancing business security and fraud detection measures. By detecting anomalies, patterns, and trends in data, GenAI-powered systems can identify security threats and fraudulent activities, safeguarding business assets, data, and reputation from potential risks and vulnerabilities.

What are the GenAI Security Risks for Organizations?

Despite its immense potential for innovation and advancement, GenAI also carries a wide range of business risks.

Bad actors can use GenAI to damage a business’s reputation by creating deepfakes: convincing videos, audio recordings, or written content designed to spread misinformation. These videos and other media may feature business representatives or portray the business as misbehaving, damaging its integrity and credibility. Even if the media is later proven fake, the damage is done once it is released, and massive efforts are required to repair it.

Attackers may also target a business’s own GenAI systems, especially when they are trained on organizational data. In these scenarios, attackers seek to compromise accounts that are permitted to query the AI. They use this access to trick or manipulate the AI into breaking out of its defined sandbox and revealing sensitive data in its output. Businesses that suffer data leakage of this type may inadvertently violate privacy regulations like GDPR or CCPA.

In a similar vein, attackers may also try to poison the AI being used by organizations to skew findings. They can do this to add bias to conclusions derived by the AI that may impact business decisions. Attacks of this nature can be subtle and complex, making it unlikely that a business will discover the underlying problem until long after it has committed to decisions based on the data.

How Can an Organization Protect Itself from GenAI Risks?

AI risk management builds on the approaches businesses already use to protect their traditional IT assets. Building an enterprise-grade GenAI security solution requires more than a single tool or strategy; it requires a combination of them to cover every angle from which GenAI can create risk for the organization.

Investing in AI security solutions helps build the foundation for this security. These platforms offer sophisticated features like model validation, data integrity checks, and access control validation, which are crucial for mitigating risks inherent in GenAI usage. Access controls are one of the most critical aspects of security on GenAI platforms because they are the gate that keeps attackers from abusing the system. Strong controls ensure that only authorized personnel can access and manipulate GenAI systems and data, minimizing the risk of unauthorized access or misuse.
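As a rough illustration of the access-control idea, the sketch below gates GenAI actions behind a role check and denies anything not explicitly granted. The role names, the action names, and the `guarded_call` helper are all hypothetical; a real deployment would more likely pull roles and policies from an identity provider than from a hard-coded dictionary.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"query"},
    "ml_engineer": {"query", "fine_tune"},
    "admin": {"query", "fine_tune", "manage_data"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_allowed(user: User, action: str) -> bool:
    """Return True only if the user's role explicitly grants the action."""
    # Unknown roles map to an empty permission set, so they are denied.
    return action in ROLE_PERMISSIONS.get(user.role, set())


def guarded_call(user: User, action: str, handler: Callable[[], str]) -> str:
    """Run handler only when the user is authorized for the action."""
    if not is_allowed(user, action):
        raise PermissionError(
            f"{user.name} ({user.role}) may not perform '{action}'"
        )
    return handler()


if __name__ == "__main__":
    ana = User(name="ana", role="analyst")
    # The lambda stands in for a real call to a GenAI platform.
    print(guarded_call(ana, "query", lambda: "model response"))
```

The deny-by-default lookup is the main design choice here: a role that is missing from the mapping gets no permissions rather than falling through to some implicit level of access.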

Regulatory compliance is non-negotiable. When deploying GenAI systems, organizations must comply with applicable regulations such as GDPR, CCPA, and HIPAA. Limiting the sensitive data used to train GenAI models and implementing robust data protection measures are essential to ensure that data is used legally and ethically and to reduce the risk of regulatory penalties.
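One simple way to limit sensitive data is to redact obvious identifiers before text is used for training or sent in a prompt. The sketch below is a minimal example under stated assumptions: the two patterns (email addresses and US Social Security number formats) and the placeholder tokens are illustrative choices, and pattern matching alone will miss many kinds of personal data, so it should not be read as sufficient for compliance.

```python
import re

# Illustrative patterns only; real data will need broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses and SSN-formatted numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = SSN_RE.sub("[SSN]", text)
    return text


if __name__ == "__main__":
    sample = "Contact jane.doe@example.com, SSN 123-45-6789."
    print(redact(sample))
```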

Regular security audits are essential to identify and address potential vulnerabilities in GenAI systems. These audits encompass vulnerability scanning, penetration testing, and compliance checks, ensuring that GenAI systems adhere to regulatory requirements and industry standards.

Monitoring GenAI models’ behavior in production environments is vital. Techniques such as anomaly detection and real-time monitoring enable organizations to promptly detect deviations from expected behavior and take corrective action to mitigate potential security incidents or performance issues.
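To make the anomaly detection idea concrete, the sketch below flags values of a monitored metric that deviate sharply from recent history, using a rolling z-score. The metric (for example, response length per request), the window size, and the threshold are assumptions chosen for illustration; production monitoring would typically track several signals and tune these values against real traffic.

```python
from statistics import mean, stdev
from typing import List, Sequence


def find_anomalies(
    values: Sequence[float], window: int = 20, threshold: float = 3.0
) -> List[int]:
    """Return indices whose value is far from the preceding window's mean.

    A point is flagged when it lies more than `threshold` sample standard
    deviations from the mean of the `window` values before it.
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all is a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical response lengths with one sudden spike at index 20.
    lengths = [100, 102, 98, 101, 99] * 4 + [500, 100, 101]
    print(find_anomalies(lengths))
```

Note that once a spike enters the window it inflates the standard deviation, so points shortly after it are less likely to be flagged; more robust statistics such as the median absolute deviation are a common alternative.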

Securing Your GenAI with Savvy

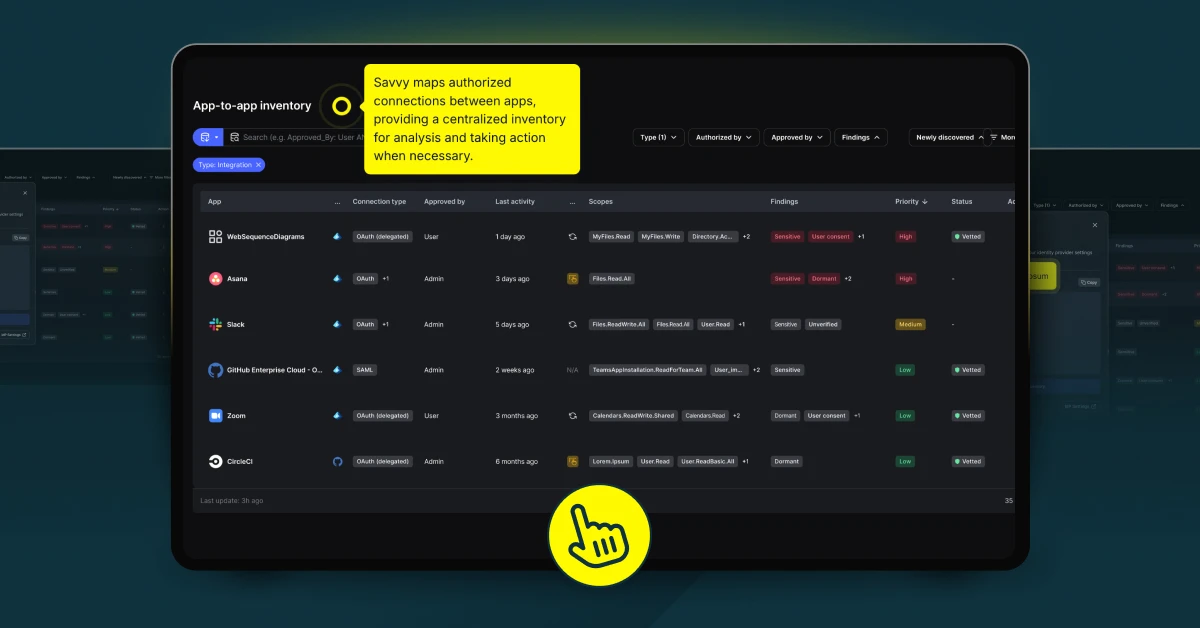

Thanks to Savvy’s user-friendly platform and expert support, securing SaaS GenAI solutions can be swift and seamless. Organizations can rapidly integrate Savvy’s capabilities using a streamlined setup process, allowing for quick connection with existing systems and SaaS applications and minimizing disruption to daily operations.

Savvy’s solution automatically learns about your organization’s connected applications, even those created outside the normal IT provisioning process. This rapid deployment enables organizations to secure their GenAI platforms without impacting their users.

Learn how Savvy can transform your organization’s approach to GenAI SaaS security and schedule a demo to see Savvy in action.

FAQ

In what ways can GenAI-powered chatbots be vulnerable to exploitation by malicious actors for social engineering attacks?

- Malicious actors may exploit vulnerabilities in chatbot algorithms to manipulate users into divulging sensitive information or performing harmful actions, highlighting the importance of robust security measures and user education.

How can businesses ensure transparency and accountability in the decision-making processes of GenAI systems, especially in regulated industries?

- Implementing explainable AI techniques and maintaining comprehensive documentation of model training and decision-making processes can enhance transparency and accountability in GenAI systems.

What are the potential privacy risks associated with GenAI systems generating and sharing synthetic data, and how can businesses mitigate these risks?

- Businesses should establish clear data anonymization and privacy policies and implement stringent access controls and encryption measures to protect synthetic data from unauthorized access or misuse.